Can a neural network learn to recognize doodling?

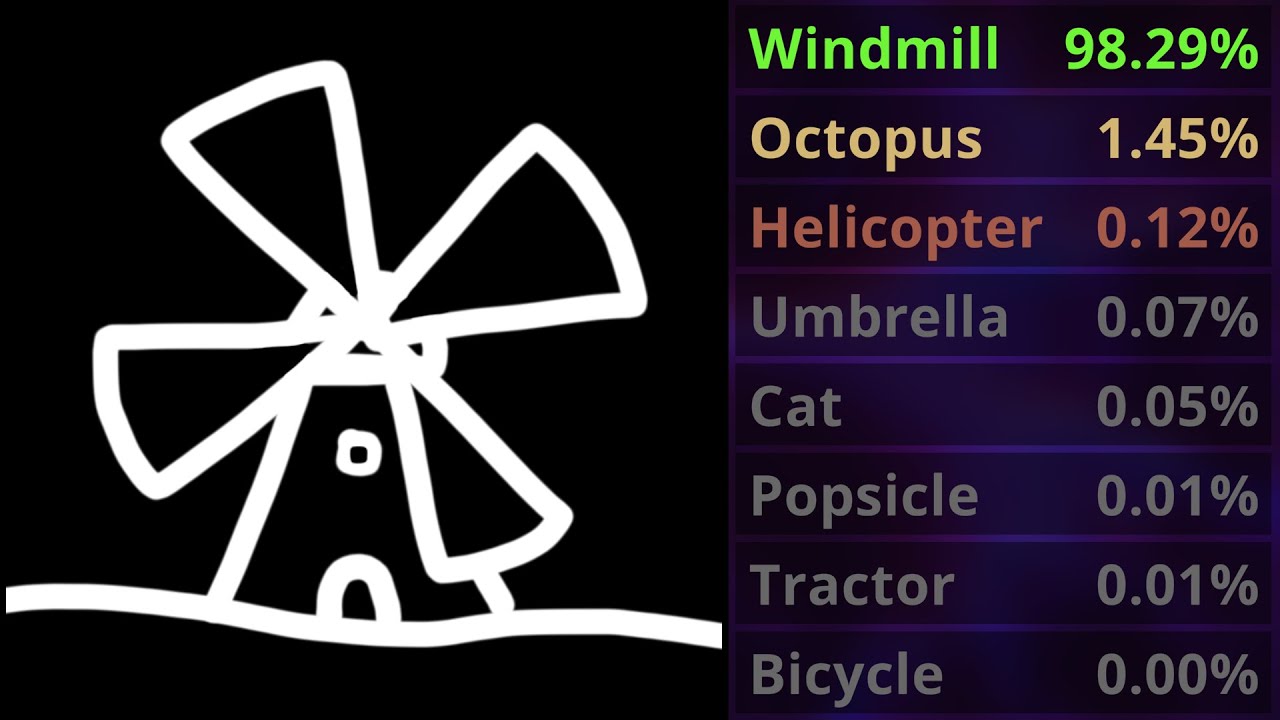

Can a neural network learn to recognize doodling? This question delves into the fascinating intersection of artificial intelligence and human creativity. Doodles, those seemingly simple scribbles we make while daydreaming or on the phone, are often dismissed as meaningless. However, they hold a surprising depth, revealing our subconscious thoughts, emotions, and even our unique artistic styles.

Neural networks, powerful algorithms inspired by the human brain, have already proven adept at recognizing patterns in images. But can they decipher the abstract and often subjective nature of doodles?

This article explores the challenges and possibilities of training a neural network to recognize doodles. We’ll delve into the inherent variability of doodles, the diverse styles and motifs they encompass, and the specific neural network architectures best suited for this task.

We’ll also examine the data acquisition and preprocessing techniques needed to train such a model, as well as the evaluation metrics used to assess its performance. Finally, we’ll explore the exciting applications of doodle recognition technology, from enhancing creative tools to revolutionizing user interface design.


Introduction

Neural networks are machine learning models loosely inspired by the structure of the human brain. They are composed of interconnected nodes, called neurons, which process and transmit information. A network learns by adjusting the weights of the connections between these neurons based on input data, which allows it to recognize patterns and make predictions.

Doodling, a form of spontaneous drawing, often involves simple shapes, lines, and abstract figures. It is characterized by its free-flowing nature and lack of formal structure, and it often reflects the doodler’s thoughts and emotions. Doodles vary widely in complexity and style, ranging from simple scribbles to intricate designs.

This article explores the feasibility of training a neural network to recognize doodles. By analyzing the characteristics and patterns present in doodles, we aim to determine whether a neural network can learn to differentiate doodles from other types of drawings and images.

Doodling Characteristics and Recognition Challenges

Doodles, as mentioned, are spontaneous and unstructured drawings. They often lack a clear subject or purpose, and their complexity can vary greatly. This inherent variability presents a challenge for recognizing doodles using traditional computer vision techniques.

The recognition of doodles requires the neural network to identify specific characteristics, such as:

- Simple Shapes: Doodles often consist of basic shapes like circles, squares, triangles, and lines. The network needs to learn to identify these shapes and their variations.

- Abstract Figures: Doodles can include abstract figures that may not have a clear meaning or representation. The network should be able to recognize these figures as part of a doodle.

- Free-flowing Lines: Doodles often involve free-flowing lines that may be curved, jagged, or irregular. The network needs to identify these lines and their patterns.

- Lack of Detail: Doodles generally lack fine details and intricate features. The network should be able to recognize doodles even with limited visual information.

The Nature of Doodles

Doodles, those seemingly effortless scribbles and sketches that often appear on the margins of our notebooks or during phone calls, are more than just random marks on paper. They are a fascinating window into our subconscious minds, revealing our thoughts, emotions, and creative impulses.

Understanding the nature of doodles is crucial to appreciating their significance and unlocking their potential.

Training a neural network to recognize doodling is a fascinating challenge. It’s like teaching a computer to understand the abstract language of scribbles. Just as a card game like cribbage is tricky at first but becomes easier with practice, a neural network gradually improves its ability to identify patterns in doodles as it sees more examples. The key is to feed it a diverse dataset, so it can learn the subtle variations in human creativity.

Variability and Subjectivity

Doodles are inherently variable and subjective, reflecting the unique personalities, moods, and experiences of their creators. The same doodle can be interpreted differently by different viewers, depending on their individual backgrounds and perspectives. For example, a simple heart doodle could symbolize love, affection, or even a broken heart, depending on the viewer’s personal experiences and cultural background.

Styles and Forms

The world of doodles is as diverse as the people who create them. Doodles can take on countless forms, ranging from simple geometric patterns to complex representational drawings. Some common doodle styles include:

- Geometric patterns: These often involve repeating shapes like circles, squares, triangles, or lines, creating visually appealing and often hypnotic designs.

- Abstract shapes: Abstract doodles can be free-flowing and expressive, resembling organic forms or abstract art.

- Representational drawings: Some doodles depict recognizable objects or figures, ranging from simple stick figures to detailed portraits.

- Text-based doodles: These doodles incorporate words or letters, often forming playful or meaningful arrangements.

The tools used to create doodles can also influence their style and form. Pencils, pens, markers, and digital tools each offer different possibilities for line weight, color, and texture, shaping the final outcome.

Common Motifs and Meanings

Certain doodle motifs appear repeatedly across cultures and time periods, suggesting a universal language of visual expression. These motifs often carry symbolic meanings, reflecting shared human experiences and emotions. Some common doodle motifs include:

| Motif | Potential Interpretations | Examples |
|---|---|---|
| Hearts | Love, affection, joy, sadness, heartbreak | Valentine’s Day cards, expressing feelings of love, drawing a broken heart to express sadness |
| Flowers | Beauty, nature, growth, new beginnings, hope | Decorative elements in art, expressing appreciation for nature, drawing flowers to symbolize hope |
| Faces | Emotions, personality, communication, self-expression | Representing different emotions like happiness, sadness, anger, creating characters in stories, expressing self-identity |
| Geometric shapes | Order, structure, balance, harmony, creativity | Designing patterns, creating abstract art, symbolizing different concepts like circles representing unity, squares representing stability |

The meaning of a doodle motif can vary depending on the context in which it is used. A heart drawn on a birthday card might express love and affection, while a heart drawn on a condolence card might symbolize grief and loss.

Writing

“The doodles that we create are like little windows into our minds, revealing our hidden thoughts, emotions, and dreams. They are a form of nonverbal communication, allowing us to express ourselves in ways that words sometimes cannot.”

Doodles are more than just idle scribbles; they are a reflection of our inner selves. They can reveal our hidden thoughts, emotions, and creative potential. They can be a source of comfort, a way to release stress, or a spark of inspiration.

In the quiet moments of our lives, when our minds are free to wander, doodles emerge, offering a glimpse into the depths of our creativity.

Neural Network Architectures

Neural networks are powerful tools for image recognition, and several architectures are particularly well-suited for this task. These architectures differ in their structure and capabilities, leading to varying strengths and limitations in recognizing doodles.

Convolutional Neural Networks (CNNs)

CNNs are the most popular architecture for image recognition due to their ability to learn hierarchical features. They excel at recognizing patterns and shapes, making them particularly effective for analyzing doodles.

CNNs are designed to process data in a grid-like format, similar to how images are structured.

- Feature Extraction: CNNs use convolutional layers to extract features from the image. These layers apply filters to the image, detecting patterns like edges, corners, and textures. The extracted features are then passed to subsequent layers, building up a hierarchical representation of the image.

- Pooling: Pooling layers reduce the dimensionality of the extracted features, making the network more efficient and less prone to overfitting. They perform downsampling by selecting the maximum or average value within a region of the feature map.

- Classification: Finally, fully connected layers are used to classify the extracted features. These layers combine the information from all the previous layers to make a prediction about the image’s content.
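The first two steps above can be sketched in a few lines of NumPy. This is only an illustration under simplifying assumptions: the filter `vertical_edge` is written by hand (a real CNN learns its filters during training), and the helper names `conv2d` and `max_pool` are invented for this example.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))

# A toy 6x6 "doodle": a single vertical stroke in column 2.
doodle = np.zeros((6, 6))
doodle[:, 2] = 1.0

# Hand-written filter that fires where a blank column is followed by ink.
vertical_edge = np.array([[-1.0, 1.0],
                          [-1.0, 1.0]])

features = np.maximum(conv2d(doodle, vertical_edge), 0.0)  # ReLU activation
pooled = max_pool(features)
print(features.shape, pooled.shape)
print(pooled)
```

The 5x5 feature map is non-zero only along the left edge of the stroke, and pooling shrinks it to 2x2 while keeping that response.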

Recurrent Neural Networks (RNNs)

RNNs are designed to process sequential data, such as text or speech. They are less commonly used for image recognition, but they can be effective when a doodle is represented as a sequence of pen strokes rather than as a finished pixel image.

RNNs use internal memory to maintain information about previous inputs, enabling them to process sequential data effectively.

- Sequence Modeling: RNNs model the dependencies between successive points and strokes, allowing them to capture how different parts of a doodle relate to one another. This is particularly useful for recognizing doodles with intricate structures or multiple strokes.

- Long Short-Term Memory (LSTM): LSTMs are a specialized type of RNN that can handle long-term dependencies in data. They are particularly effective for recognizing doodles with complex structures or long sequences of drawing actions.
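To make "sequential data" concrete for doodles, the sketch below turns a list of pen strokes into the kind of step-by-step sequence an RNN or LSTM could consume. The row layout `(dx, dy, pen_lifted)` is one common convention in sketch modeling and is an assumption here, as is the helper name `strokes_to_sequence`.

```python
import numpy as np

def strokes_to_sequence(strokes):
    """Flatten strokes into rows of (dx, dy, pen_lifted).

    strokes: list of strokes, each a list of absolute (x, y) points.
    dx, dy are offsets from the previous point; pen_lifted is 1 on the
    last point of each stroke and 0 otherwise.
    """
    rows = []
    prev_x, prev_y = 0, 0
    for stroke in strokes:
        for idx, (x, y) in enumerate(stroke):
            pen_lifted = 1 if idx == len(stroke) - 1 else 0
            rows.append((x - prev_x, y - prev_y, pen_lifted))
            prev_x, prev_y = x, y
    return np.array(rows, dtype=np.float32)

# Two strokes: an "L"-shaped corner, then a separate vertical line.
strokes = [
    [(0, 0), (10, 0), (10, 10)],
    [(0, 10), (0, 0)],
]
sequence = strokes_to_sequence(strokes)
print(sequence)
```

Each row is one time step. Feeding offsets rather than absolute coordinates makes the representation independent of where on the canvas the doodle was drawn.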

Comparison: CNNs vs. RNNs

CNNs are generally preferred for doodle recognition due to their ability to learn hierarchical features and their efficiency in processing images. However, RNNs can be beneficial when the stroke order is available and the drawing sequence itself carries information.

| Feature | CNN | RNN |
|---|---|---|
| Strengths | Excellent for recognizing patterns and shapes. Efficient image processing. | Effective for doodles captured as stroke sequences, where the order of drawing actions matters. |
| Limitations | Works on the finished image, so it ignores stroke order and drawing dynamics. | Less efficient for processing images compared to CNNs. |

Data Acquisition and Preprocessing

Training a neural network to recognize doodles requires a substantial and diverse dataset. This section delves into the challenges of acquiring such a dataset and the essential steps involved in preparing the data for training.

Data Acquisition

Obtaining a large and diverse dataset of doodles presents several challenges:

- Variability in Doodle Styles: Doodles can vary significantly in terms of style, complexity, and subject matter. Capturing this diversity is crucial for training a robust model.

- Limited Availability of Public Datasets: Publicly available datasets specifically designed for doodle recognition are relatively limited.

- Data Collection Methods: Collecting a large dataset of doodles requires efficient and scalable data collection methods. This could involve crowdsourcing platforms, mobile applications, or specialized data collection tools.

Data Cleaning and Normalization

Once the data is collected, it’s essential to clean and normalize it to ensure optimal performance of the neural network:

- Removing Irrelevant Data: This step involves removing any data points that are not doodles, such as images of text or other objects.

- Handling Missing Data: Missing data can occur due to errors in the collection process or other factors. Imputation techniques can be used to fill in missing values.

- Data Normalization: Normalizing the data ensures that all features are on the same scale. This can be achieved by techniques such as min-max scaling or standardization.
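The two normalization techniques named above can be sketched as follows. The function names and the small `eps` guard against division by zero are choices made for this example, not part of any particular library.

```python
import numpy as np

def min_max_scale(x, eps=1e-8):
    """Rescale values linearly so the smallest maps to 0 and the largest to ~1."""
    x = np.asarray(x, dtype=np.float64)  # cast first: uint8 arithmetic would wrap
    return (x - x.min()) / (x.max() - x.min() + eps)

def standardize(x, eps=1e-8):
    """Shift and scale values to zero mean and (approximately) unit variance."""
    x = np.asarray(x, dtype=np.float64)
    return (x - x.mean()) / (x.std() + eps)

# A tiny 2x2 grayscale "image" with 8-bit pixel values.
pixels = np.array([[0, 128], [255, 64]], dtype=np.uint8)
scaled = min_max_scale(pixels)
zscored = standardize(pixels)
print(scaled)
print(zscored.mean(), zscored.std())
```

For 8-bit images, dividing by 255 is a common shortcut for min-max scaling, since the possible pixel range is known in advance.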

Data Preprocessing

The final step in preparing the data involves transforming it into a format suitable for training the neural network:

- Image Resizing: All images should be resized to a consistent size to ensure compatibility with the neural network architecture.

- Data Augmentation: Augmenting the data can improve the model’s generalization ability by introducing variations in the training set. This could involve techniques such as random cropping, rotation, and flipping.

- Labeling: Each doodle image needs to be labeled with its corresponding category. This can be done manually or using automated labeling techniques.
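As a rough illustration of augmentation and labeling, the sketch below applies a random shift and an optional horizontal flip to a bitmap and converts category names to integer labels. The helper names, the shift range, and the example categories are assumptions made for this example.

```python
import numpy as np

def random_shift(image, max_shift, rng):
    """Translate by up to max_shift pixels, filling exposed areas with zeros."""
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    padded = np.pad(image, max_shift, mode="constant")
    top = max_shift - dy
    left = max_shift - dx
    h, w = image.shape
    return padded[top:top + h, left:left + w]

def augment(image, rng, max_shift=2, flip_prob=0.5):
    """Return a randomly shifted and possibly mirrored copy of the image."""
    out = random_shift(image, max_shift, rng)
    if rng.random() < flip_prob:
        out = out[:, ::-1]  # horizontal flip
    return out

rng = np.random.default_rng(0)
image = np.zeros((28, 28))
image[10:18, 10:18] = 1.0          # a filled square standing in for a doodle
augmented = augment(image, rng)

# Labeling: map category names to integer class indices.
labels = ["cat", "house", "cat"]
label_to_index = {name: i for i, name in enumerate(sorted(set(labels)))}
encoded = [label_to_index[name] for name in labels]
print(augmented.shape, encoded)
```

Flipping is not always label-preserving: a mirrored letter or digit may no longer belong to its original class, so the set of augmentations should be chosen per dataset.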

Training and Evaluation

This section delves into the crucial aspects of training and evaluating a neural network to recognize doodles. We’ll cover the data preparation, model architecture, training process, hyperparameter tuning, and evaluation metrics.

Training a Neural Network on a Doodle Dataset

Training a neural network to recognize doodles involves preparing the data, selecting a suitable model architecture, and defining the training process.

Data Preparation

- Data Source: The doodle dataset can be obtained from various sources, such as:

    - Quick, Draw! Dataset: This dataset, created by Google, contains millions of doodles contributed by users, providing a rich source of diverse and labeled doodle data.

    - Other Public Datasets: Other public sketch collections, such as the TU-Berlin sketch dataset or the Sketchy database, may also be suitable for training doodle recognition models.

    - Custom Dataset: You can also create a custom dataset by collecting doodles from your own users, for example with a drawing-prompt tool modeled on Google’s “Quick, Draw!” game.

- Data Format: Doodles are typically stored either as raster images (standard formats like JPEG or PNG, or small grayscale bitmaps such as the 28x28 version of Quick, Draw!) or as sequences of pen strokes. Each sample represents a single doodle, and the associated label indicates the object depicted in the doodle.

- Data Preprocessing: Before training the model, the doodle images need to be preprocessed to ensure consistency and optimize performance. Common preprocessing steps include:

    - Resizing Images: All images are resized to a uniform size, ensuring that the model receives inputs of the same dimensions.

    - Normalization: The pixel values of the images are normalized to a specific range (e.g., 0 to 1), preventing issues related to differing pixel value scales.

    - Data Augmentation: Techniques like random rotations, flips, and zooms can be applied to increase the size and diversity of the training data, improving the model’s generalization ability.
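When doodles arrive as pen strokes rather than image files, they have to be drawn onto a fixed-size grid before a CNN can use them. The sketch below is one simple way to do that, assuming strokes are lists of `(x, y)` points; the function name `rasterize` and the point-sampling approach are choices for this example, not the method used by any specific dataset.

```python
import numpy as np

def rasterize(strokes, size=28, samples_per_segment=50):
    """Draw strokes onto a size x size grid with pixel values in {0, 1}."""
    points = np.array([p for stroke in strokes for p in stroke], dtype=np.float64)
    mins = points.min(axis=0)
    # One scale for both axes keeps the doodle's aspect ratio.
    span = max((points.max(axis=0) - mins).max(), 1e-8)
    canvas = np.zeros((size, size), dtype=np.float32)
    t = np.linspace(0.0, 1.0, samples_per_segment)[:, None]
    for stroke in strokes:
        pts = (np.asarray(stroke, dtype=np.float64) - mins) / span * (size - 1)
        for start, end in zip(pts[:-1], pts[1:]):
            line = start + t * (end - start)       # points along the segment
            cols = np.rint(line[:, 0]).astype(int)
            rows = np.rint(line[:, 1]).astype(int)
            canvas[rows, cols] = 1.0
    return canvas

# One stroke tracing a square in arbitrary canvas coordinates.
square = [[(0, 0), (100, 0), (100, 100), (0, 100), (0, 0)]]
bitmap = rasterize(square)
print(bitmap.shape, bitmap.min(), bitmap.max())
```

The output already lies in the 0 to 1 range, so resizing and normalization are handled in a single step. `samples_per_segment` needs to be large enough that consecutive samples are less than one pixel apart.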

Model Architecture

- Convolutional Neural Network (CNN): CNNs are particularly well-suited for image recognition tasks, including doodle recognition. They excel at extracting features from images, making them ideal for capturing the intricate patterns and shapes present in doodles.

- Recurrent Neural Network (RNN): RNNs are adept at handling sequential data, which can be useful for recognizing doodles that involve multiple strokes or have a sequential drawing pattern. However, CNNs are often preferred for doodle recognition due to their ability to extract spatial features.

Training Process

- Loss Function: A loss function quantifies the difference between the model’s predictions and the actual labels. Common loss functions for image classification tasks include:

    - Categorical Cross-Entropy: This loss function is suitable for multi-class classification problems, where the model predicts the probability of a doodle belonging to each class.

- Optimizer: An optimizer updates the model’s weights during training to minimize the loss function. Popular optimizers include:

    - Stochastic Gradient Descent (SGD): A basic but effective optimizer that updates weights based on the gradient of the loss function.

    - Adam: A more sophisticated optimizer that adapts the learning rate for each parameter, often leading to faster convergence.

- Batch Size: The number of doodles processed in each training iteration. A larger batch size can speed up training but may require more memory. A common batch size is 32 or 64.

- Epochs: The number of times the entire training dataset is iterated over during training. More epochs allow the model to learn more complex patterns but can lead to overfitting if the model is trained for too long.
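To show what the loss function actually computes, here is a small NumPy sketch of softmax followed by categorical cross-entropy, plus the arithmetic linking dataset size, batch size, and training iterations. The logits, labels, and the dataset size of 50,000 are made-up values for illustration.

```python
import math
import numpy as np

def softmax(logits):
    """Turn raw scores into class probabilities that sum to 1 per row."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def categorical_cross_entropy(probs, one_hot, eps=1e-12):
    """Mean negative log-probability assigned to the true class."""
    return float(-np.mean(np.sum(one_hot * np.log(probs + eps), axis=1)))

# Raw network outputs for two doodles and three classes.
logits = np.array([[2.0, 0.5, 0.1],
                   [0.2, 0.1, 3.0]])
# One-hot labels: the second doodle's true class (1) gets a low score above.
labels = np.array([[1, 0, 0],
                   [0, 1, 0]])

probs = softmax(logits)
loss = categorical_cross_entropy(probs, labels)
per_example = -np.sum(labels * np.log(probs), axis=1)
print(probs.round(3), loss, per_example)

# One epoch over 50,000 doodles with batch size 32:
steps_per_epoch = math.ceil(50_000 / 32)
print(steps_per_epoch)
```

The misclassified second doodle contributes far more to the loss than the first, which is what pushes the optimizer to correct it.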

Code Example

```python
import tensorflow as tf

# Assumed inputs, prepared as described under Data Preparation:
#   x_train: float array of shape (n, 28, 28, 1), scaled to [0, 1]
#   y_train: one-hot labels of shape (n, 10), as categorical_crossentropy expects

# Define the model architecture
model = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation='softmax'),
])

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model
model.fit(x_train, y_train, epochs=10, batch_size=32)
```

This code snippet demonstrates the training process using TensorFlow. It defines a simple CNN architecture for 28x28 grayscale doodles in 10 classes, compiles the model with the Adam optimizer and categorical cross-entropy loss, and trains the model for 10 epochs with a batch size of 32.

Hyperparameter Tuning to Optimize Performance

Hyperparameter tuning is essential for optimizing the model’s performance by finding the best combination of hyperparameters.

Hyperparameter Selection

- Learning Rate: Controls the step size taken during weight updates. A smaller learning rate converges more slowly but is less likely to overshoot a minimum or diverge; a larger one trains faster but can make training unstable.

- Number of Layers: Increasing the number of layers can improve the model’s ability to learn complex patterns but can also increase the risk of overfitting.

- Number of Neurons: The number of neurons in each layer affects the model’s capacity. More neurons can improve performance but can also increase computational cost.

- Activation Function: The activation function determines the output of each neuron. Different activation functions can lead to different model behaviors.

Tuning Techniques

- Grid Search: Exhaustively searches a predefined range of hyperparameter values, evaluating the model’s performance for each combination.

- Random Search: Randomly samples hyperparameter values from a predefined distribution, potentially finding better combinations than grid search.

- Bayesian Optimization: Uses a probabilistic model to guide the search for optimal hyperparameters, efficiently exploring the search space.
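The difference between the first two techniques is easiest to see on a toy problem. In the sketch below, `validation_score` is a hypothetical stand-in for "train a model and return its validation accuracy"; its formula is invented so the example runs instantly and has a known best setting.

```python
import itertools
import random

def validation_score(learning_rate, filters):
    """Hypothetical objective: peaks at learning_rate=0.003, filters=64."""
    return 1.0 - abs(learning_rate - 0.003) * 50 - abs(filters - 64) / 640

def grid_search(learning_rates, filter_counts):
    """Evaluate every combination of the listed values and keep the best."""
    candidates = itertools.product(learning_rates, filter_counts)
    return max(candidates, key=lambda c: validation_score(*c))

def random_search(n_trials, seed=0):
    """Sample learning rates log-uniformly and filter counts from a list."""
    rng = random.Random(seed)
    candidates = [
        (10 ** rng.uniform(-4, -2), rng.choice([32, 64, 96, 128]))
        for _ in range(n_trials)
    ]
    return max(candidates, key=lambda c: validation_score(*c))

best_grid = grid_search([1e-4, 1e-3, 1e-2], [32, 64, 128])   # 9 evaluations
best_random = random_search(n_trials=9)                      # 9 evaluations
print(best_grid, validation_score(*best_grid))
print(best_random, validation_score(*best_random))
```

With the same budget of nine evaluations, grid search can only ever try three distinct learning rates, while random search tries nine. That is the usual argument for random search when only a few hyperparameters really matter.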

Performance Metrics

- Accuracy: The proportion of correctly classified doodles to the total number of doodles. It’s a simple and widely used metric, but it doesn’t provide insights into the types of errors the model makes.

- Precision: The proportion of correctly classified doodles among all doodles predicted as belonging to a specific class. It’s important for minimizing false positives.

- Recall: The proportion of correctly classified doodles among all doodles that actually belong to a specific class. It’s important for minimizing false negatives.

- F1-Score: The harmonic mean of precision and recall, providing a balanced metric that considers both false positives and false negatives.
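Written out for a single class $c$, with $TP_c$, $FP_c$, and $FN_c$ denoting that class’s true positives, false positives, and false negatives (notation introduced here for illustration), the three class-level metrics are:

$$
\mathrm{Precision}_c = \frac{TP_c}{TP_c + FP_c}, \qquad
\mathrm{Recall}_c = \frac{TP_c}{TP_c + FN_c}, \qquad
F_{1,c} = \frac{2 \cdot \mathrm{Precision}_c \cdot \mathrm{Recall}_c}{\mathrm{Precision}_c + \mathrm{Recall}_c}
$$

As a worked example with made-up numbers: if a model labels 10 doodles as “cat” and 8 of them really are cats, while the test set contains 16 cat doodles in total, then precision is $8/10 = 0.8$, recall is $8/16 = 0.5$, and $F_1 = (2 \cdot 0.8 \cdot 0.5)/(0.8 + 0.5) \approx 0.62$.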

Code Example

```python
import tensorflow as tf
from keras_tuner import RandomSearch
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense


def build_model(hp):
    model = Sequential()
    # Each hyperparameter gets its own name; reusing one name for both layers
    # would tie them to the same value.
    model.add(Conv2D(hp.Int('filters', min_value=32, max_value=128, step=32), (3, 3),
                     activation='relu', input_shape=(28, 28, 1)))
    model.add(MaxPooling2D((2, 2)))
    model.add(Flatten())
    model.add(Dense(hp.Int('dense_units', min_value=32, max_value=128, step=32),
                    activation='relu'))
    model.add(Dense(10, activation='softmax'))
    model.compile(
        optimizer=tf.keras.optimizers.Adam(
            hp.Float('learning_rate', min_value=1e-4, max_value=1e-2, sampling='log')),
        loss='categorical_crossentropy',
        metrics=['accuracy'])
    return model


tuner = RandomSearch(
    build_model,
    objective='val_accuracy',
    max_trials=5,
    executions_per_trial=3,
    directory='my_tuner',
    project_name='doodle_recognition')

# x_train / y_train: the same arrays used in the training example
tuner.search(x_train, y_train, epochs=10, validation_split=0.2)

best_hps = tuner.get_best_hyperparameters(num_trials=1)[0]
print(f"Best hyperparameters: {best_hps.values}")
```

This code snippet uses Keras Tuner for random search hyperparameter tuning. It defines a model building function with three hyperparameters to be tuned (number of convolution filters, number of dense units, and learning rate) and uses the `RandomSearch` class to search for the best hyperparameter combination based on validation accuracy.

Evaluation Metrics for Recognizing Doodles

Evaluating the performance of a doodle recognition model involves using various metrics to assess its ability to correctly classify doodles.

Accuracy

Accuracy measures the overall correctness of the model’s predictions. A high accuracy indicates that the model is performing well in general. However, accuracy alone can be misleading, especially when dealing with imbalanced datasets or when the cost of false positives or false negatives is unequal.

Precision

Precision measures the proportion of correctly classified doodles among all doodles predicted as belonging to a specific class. A high precision is important when minimizing false positives is crucial, such as in medical diagnosis or spam detection.

Recall

Recall measures the proportion of correctly classified doodles among all doodles that actually belong to a specific class. A high recall is important when minimizing false negatives is crucial, such as in fraud detection or disease screening.

F1-Score

The F1-score is the harmonic mean of precision and recall. It provides a balanced metric that considers both false positives and false negatives. A high F1-score indicates that the model is performing well in terms of both precision and recall.

Confusion Matrix

A confusion matrix is a visual representation of the model’s performance in classifying doodles. It shows the number of correctly classified doodles and the number of misclassifications for each class. Analyzing the confusion matrix can provide insights into the types of errors the model makes, helping to identify areas for improvement.
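The sketch below builds a confusion matrix by hand and derives per-class precision, recall, and F1 from it. The two-class “cat”/“dog” test set is invented for illustration and is deliberately imbalanced to show why accuracy alone can mislead.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Count predictions; rows are true classes, columns are predicted classes."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        matrix[t, p] += 1
    return matrix

def per_class_metrics(matrix):
    """Return per-class precision, recall, and F1 (0 where undefined)."""
    tp = np.diag(matrix).astype(float)
    predicted = matrix.sum(axis=0)   # column sums: how often each class was predicted
    actual = matrix.sum(axis=1)      # row sums: how often each class truly occurs
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1

# Imbalanced toy test set: 8 "cat" doodles (class 0) and 2 "dog" doodles (class 1).
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 8 + [0, 1]            # one of the two dogs is mistaken for a cat

cm = confusion_matrix(y_true, y_pred, n_classes=2)
accuracy = np.trace(cm) / cm.sum()
precision, recall, f1 = per_class_metrics(cm)
print(cm)
print(accuracy, precision, recall, f1)
```

Overall accuracy is 90%, yet recall for the rare “dog” class is only 50%. The off-diagonal entry in the second row of the matrix shows exactly where that error comes from.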

Code Example

```python
import numpy as np
from sklearn.metrics import (accuracy_score, precision_score, recall_score,
                             f1_score, confusion_matrix)

# Predict class probabilities for the test set
y_pred = model.predict(x_test)

# Convert predicted probabilities to class labels
y_pred_classes = np.argmax(y_pred, axis=1)

# y_test is assumed to be one-hot encoded (to match categorical_crossentropy).
# If it already holds integer class labels, use y_true_classes = y_test instead.
y_true_classes = np.argmax(y_test, axis=1)

# Calculate evaluation metrics
accuracy = accuracy_score(y_true_classes, y_pred_classes)
precision = precision_score(y_true_classes, y_pred_classes, average='macro')
recall = recall_score(y_true_classes, y_pred_classes, average='macro')
f1 = f1_score(y_true_classes, y_pred_classes, average='macro')
# Stored as `cm` so the imported confusion_matrix function is not shadowed
cm = confusion_matrix(y_true_classes, y_pred_classes)

print(f"Accuracy: {accuracy}")
print(f"Precision: {precision}")
print(f"Recall: {recall}")
print(f"F1-Score: {f1}")
print(f"Confusion Matrix:\n{cm}")
```

This code snippet demonstrates how to calculate and print evaluation metrics using scikit-learn. It predicts the labels for the test set, converts predicted probabilities to class labels, and calculates accuracy, macro-averaged precision, recall, and F1-score, and the confusion matrix.

6. Challenges and Limitations

Training a neural network to recognize doodles presents unique challenges, primarily due to the inherent variability and ambiguity present in doodle data. These challenges directly impact the model’s performance and require careful consideration during the training process.

6.1. Training Challenges

The training process for a neural network designed to recognize doodles faces several unique challenges stemming from the nature of doodle data.

- Variability in Drawing Style: Doodles are highly subjective, with individuals employing diverse drawing styles, stroke thickness, and levels of detail. This variability makes it difficult for a neural network to generalize across different drawing styles and recognize the same doodle drawn by different individuals.

- Ambiguity in Interpretation: The interpretation of doodles can be ambiguous, as different individuals might perceive the same doodle differently. This ambiguity makes it challenging for a neural network to learn a consistent representation of doodles and accurately classify them.

- Presence of Noise: Doodle data often contains noise, such as shaky lines, accidental marks, or incomplete strokes. This noise can introduce errors in the training process, leading to the model learning incorrect patterns and reducing its accuracy.

6.2. Impact of Noise and Variability

The presence of noise and variability in doodle data can significantly impact the performance of a neural network model.

| Noise Type | Impact on Model Performance | Example |
|---|---|---|
| Shaky Lines | Can introduce errors in the shape and structure of the doodle, leading to misclassification. | A doodle of a circle with shaky lines might be misclassified as a squiggle. |
| Accidental Marks | Can introduce spurious features into the doodle, confusing the model. | A doodle of a heart with an accidental mark near it might be misclassified as a different shape. |
| Incomplete Strokes | Can make it difficult for the model to recognize the complete shape of the doodle. | A doodle of a square with an incomplete side might be misclassified as a triangle. |

The variability in doodle styles can also affect the generalization capabilities of a trained model. A model trained on doodles drawn with a specific style might struggle to recognize doodles drawn in a different style. For instance, a model trained on detailed and precise doodles might perform poorly on simpler and more abstract doodles.
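One way to probe or improve a model’s robustness is to simulate these noise types on clean strokes. The sketch below does this for a circle drawn as a sequence of points; the function names and noise magnitudes are assumptions chosen for illustration, not calibrated to real drawing data.

```python
import numpy as np

def jitter(points, scale, rng):
    """Shaky lines: add small Gaussian noise to every point."""
    return points + rng.normal(0.0, scale, size=points.shape)

def truncate(points, keep_fraction):
    """Incomplete strokes: keep only the first part of the stroke."""
    n_keep = max(2, int(round(len(points) * keep_fraction)))
    return points[:n_keep]

def stray_mark(rng, extent=1.0, length=0.05):
    """Accidental marks: a short extra stroke at a random position."""
    start = rng.uniform(-extent, extent, size=2)
    return np.stack([start, start + rng.normal(0.0, length, size=2)])

rng = np.random.default_rng(42)
angles = np.linspace(0.0, 2.0 * np.pi, 40)
circle = np.column_stack([np.cos(angles), np.sin(angles)])  # clean unit circle

shaky = jitter(circle, scale=0.03, rng=rng)
partial = truncate(circle, keep_fraction=0.75)
extra = stray_mark(rng)
print(shaky.shape, partial.shape, extra.shape)
```

Training on a mix of clean and perturbed strokes, or evaluating on perturbed ones only, gives a rough measure of how sensitive a model is to each noise type.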

6.3. Limitations of Current Architectures

Current convolutional neural network (CNN) architectures, while effective for image recognition tasks, face limitations when handling complex doodle patterns.

- Difficulty in Capturing Intricate Shapes: CNNs rely on local receptive fields, which can make it challenging to capture the global structure of intricate doodle shapes. This limitation can lead to misclassifications when dealing with doodles containing complex geometries or overlapping elements.

- Limited Ability to Handle Overlapping Elements: CNNs struggle to differentiate between overlapping elements in doodles, potentially leading to misinterpretations. This is particularly relevant when doodles contain multiple objects or intricate designs with overlapping components.

To address these limitations, researchers are exploring architectural modifications, such as incorporating attention mechanisms or using more sophisticated feature extraction techniques. These modifications aim to improve the ability of CNNs to capture complex doodle features and handle overlapping elements more effectively.
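As a rough idea of what an attention mechanism adds, the NumPy sketch below applies scaled dot-product self-attention to a flattened feature map, so every position can draw on every other position rather than only its local neighborhood. It is a generic, simplified form: the learned query, key, and value projections used in practice are omitted, and the random `feature_map` merely stands in for real CNN output.

```python
import numpy as np

def self_attention(features):
    """Scaled dot-product self-attention with identity projections.

    features: array of shape (positions, channels).
    Returns the attended features and the attention weights.
    """
    d = features.shape[1]
    scores = features @ features.T / np.sqrt(d)        # pairwise similarity
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # rows sum to 1
    return weights @ features, weights

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(4, 4, 8))   # stand-in for a 4x4 map with 8 channels
flat = feature_map.reshape(-1, 8)          # 16 positions, 8 channels each

attended, weights = self_attention(flat)
print(attended.shape, weights.shape)
```

Each output row is a weighted average over all 16 positions, which is how attention lets distant parts of a doodle influence one another in a single step.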

6.4. Summary

Training a neural network to recognize doodles presents significant challenges due to the inherent variability and ambiguity in doodle data, as well as the presence of noise. Addressing these challenges requires robust solutions, such as incorporating data augmentation techniques to handle variability, developing noise-resistant models, and exploring advanced architectures that can effectively capture complex doodle patterns.

7. Applications and Potential

The ability of a neural network to recognize doodles opens up a wide range of exciting applications across various domains, from creative tools to educational software and user interface design. This technology has the potential to revolutionize how we interact with computers and create new and innovative experiences.

7.1. Creative Tools

A doodle-recognizing neural network can significantly enhance drawing applications, providing users with a more intuitive and powerful creative experience.

- Automatic shape recognition: The network can automatically identify and classify basic shapes, such as circles, squares, and triangles, within a user’s doodle. This can streamline the drawing process, allowing users to quickly create complex shapes without having to manually draw them.

For example, a user could simply doodle a rough outline of a house, and the application could automatically generate a perfectly formed house shape.

- Color suggestion: Based on the content and style of a doodle, the neural network can suggest appropriate colors for different parts of the drawing. This can help users to create visually appealing and harmonious color palettes, even if they are not experienced with color theory.

For instance, if a user doodles a sunset, the application could suggest warm colors like orange, red, and yellow, or if they doodle a forest, it could suggest shades of green and brown.

- Style transfer: The neural network can be trained on various artistic styles, allowing users to transform their doodles into different art forms. For example, a user could doodle a simple landscape, and the application could then convert it into a realistic painting, a cartoon, or an abstract piece.

This feature can empower users to explore different creative expressions and experiment with various artistic styles.

The integration of a doodle-recognizing neural network can enhance the user experience for both novice and professional artists. Novice artists can benefit from the automated shape recognition and color suggestion features, which can help them create more polished drawings. Professional artists can use the style transfer feature to experiment with new artistic styles and create unique works of art.
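Once a classifier has decided that a rough stroke is, say, a circle, replacing it with a clean shape is a geometry problem. The sketch below covers only that second step, using a simple centroid-and-mean-radius fit; the function names are invented for this example, and the method assumes the points are spread fairly evenly around the shape.

```python
import numpy as np

def fit_circle(points):
    """Estimate center, radius, and relative roughness of a closed stroke."""
    points = np.asarray(points, dtype=np.float64)
    center = points.mean(axis=0)
    radii = np.linalg.norm(points - center, axis=1)
    return center, radii.mean(), radii.std() / radii.mean()

def clean_circle(center, radius, n_points=64):
    """Generate evenly spaced points on the fitted circle."""
    angles = np.linspace(0.0, 2.0 * np.pi, n_points, endpoint=False)
    return center + radius * np.column_stack([np.cos(angles), np.sin(angles)])

# Simulate a hand-drawn circle: radius 5 around (3, -2), with wobble.
rng = np.random.default_rng(1)
angles = np.linspace(0.0, 2.0 * np.pi, 50, endpoint=False)
rough = 5.0 * np.column_stack([np.cos(angles), np.sin(angles)]) + [3.0, -2.0]
rough += rng.normal(0.0, 0.1, size=rough.shape)

center, radius, roughness = fit_circle(rough)
snapped = clean_circle(center, radius)
print(center, radius, roughness)
```

The `roughness` value could double as a sanity check: if the radii vary too much, the stroke probably is not a circle and the application can leave it untouched.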

7.2. Educational Software

Doodle recognition technology can be effectively incorporated into educational software for children, providing a fun and engaging way to learn.

- Interactive drawing games: Doodle-recognizing neural networks can power interactive drawing games where children can draw objects or characters that the software can recognize and respond to. This can create a more dynamic and engaging learning experience, encouraging children to explore their creativity and learn new concepts.

For example, a game could ask children to draw different animals, and the software could provide feedback based on the accuracy of their drawings.

- Personalized feedback on drawing skills: The network can analyze children’s doodles and provide personalized feedback on their drawing skills, identifying areas where they can improve. This can help children to develop their fine motor skills, hand-eye coordination, and artistic abilities. For example, the software could identify if a child is struggling to draw straight lines or if their proportions are off, and provide targeted feedback to help them improve.

- Gamified learning experiences: Doodle recognition can be used to create gamified learning experiences that make learning fun and engaging for children. For instance, a game could ask children to draw different shapes or objects to solve puzzles or complete challenges. This can encourage children to learn new concepts while having fun, making learning more enjoyable and effective.

This technology can be used to improve children’s creativity, problem-solving abilities, and fine motor skills. By providing personalized feedback and engaging learning experiences, doodle-recognizing neural networks can foster a love of learning and encourage children to explore their creative potential.

7.3. User Interface Design

Doodle recognition can be a powerful tool for enhancing user interface design, creating more intuitive and engaging user interactions.

- Gesture-based navigation: The network can be used to recognize user gestures, allowing for more natural and intuitive navigation within applications. For example, users could draw a circle to zoom in on a specific area of a map or draw a line to scroll through a list of items.

- Personalized UI elements: Doodle recognition can be used to create personalized UI elements that adapt to the user’s preferences and drawing style. For example, a user could doodle a custom button or icon that is then incorporated into the application’s interface.

- Intuitive drawing-based input methods: Doodle recognition can enable intuitive drawing-based input methods, allowing users to interact with applications using their drawings. For example, a user could doodle a simple diagram to create a flowchart or a sketch to illustrate a concept.

By integrating doodle recognition into user interface design, developers can create more engaging and user-friendly experiences. This can lead to increased user satisfaction, improved productivity, and more intuitive interactions with digital devices.
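For gesture-based navigation, the recognizer’s output still has to be mapped to an interface action, and ambiguous gestures are usually better ignored than guessed. A minimal sketch, assuming the model returns a probability per gesture class; the class names, action names, and the 0.8 threshold are all hypothetical.

```python
def choose_action(class_probs, actions, threshold=0.8):
    """Return the action for the most likely gesture, or None if unsure.

    class_probs: dict mapping gesture label -> predicted probability.
    actions: dict mapping gesture label -> action name.
    """
    label = max(class_probs, key=class_probs.get)
    if class_probs[label] < threshold:
        return None                 # not confident enough to act
    return actions.get(label)       # None for labels without an action

# Hypothetical mapping from recognized gestures to UI actions.
ACTIONS = {"circle": "zoom_in", "line": "scroll"}

confident = choose_action({"circle": 0.93, "line": 0.05, "other": 0.02}, ACTIONS)
unsure = choose_action({"circle": 0.48, "line": 0.45, "other": 0.07}, ACTIONS)
print(confident, unsure)
```

Raising the threshold trades missed gestures for fewer accidental actions, which mirrors the precision and recall trade-off in model evaluation.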

7.4. Future Advancements

The field of doodle recognition is constantly evolving, with ongoing research and development efforts pushing the boundaries of what is possible.

- Real-time recognition: Future advancements in doodle recognition technology will likely enable real-time recognition, allowing for instant feedback and interaction. This could revolutionize how we interact with computers, enabling us to control devices with our drawings in real time.

- Multi-modal input: Doodle recognition could be integrated with other input modalities, such as voice recognition or touch input, creating a more comprehensive and intuitive user experience. This could lead to the development of more sophisticated and powerful applications that combine different forms of input.

- Integration with other AI technologies: Doodle recognition can be integrated with other AI technologies, such as natural language processing or computer vision, to create even more powerful and versatile applications. For example, a doodle-recognizing neural network could be combined with a natural language processing system to allow users to interact with computers using a combination of drawings and spoken language.

These advancements could reach well beyond drawing applications. For example, real-time sketch recognition could let clinicians annotate medical images by drawing directly on a scan, and multi-modal input could make virtual reality experiences more immersive and interactive. Integration with other AI technologies could also lead to applications that are hard to anticipate today.

Common Queries

What are some real-world applications of doodle recognition?

Doodle recognition has the potential to revolutionize various fields, including:

- Creative Tools: Imagine drawing apps that automatically recognize shapes, suggest colors, and even help you translate your doodles into different artistic styles.

- Educational Software: Doodle recognition could power interactive drawing games for children, provide personalized feedback on their art skills, and create engaging learning experiences.

- User Interface Design: Doodle recognition could enable more intuitive and engaging user interactions, such as gesture-based navigation and personalized UI elements.

How accurate are current doodle recognition models?

The accuracy of doodle recognition models depends on factors like the complexity of the doodles, the quality of the training data, and the specific neural network architecture used. While significant progress has been made, achieving high accuracy on diverse and complex doodles remains a challenge.

What are the ethical considerations surrounding doodle recognition?

As with any AI technology, ethical considerations are crucial. For example, it’s important to ensure that doodle recognition models don’t perpetuate biases or misinterpret doodles in ways that could be harmful or discriminatory.