A/B testing in machine learning is the practice of scientifically comparing different versions of a model to discover which performs best.

This process is vital for any data scientist or developer who wants to build the most effective and efficient models.

Imagine you’re trying to build a recommendation engine for an online store. You could use A/B testing to compare different algorithms, hyperparameter settings, or even the way recommendations are displayed. By testing different versions and measuring their performance, you can identify the best approach for your specific goals.

Introduction to A/B Testing in Machine Learning

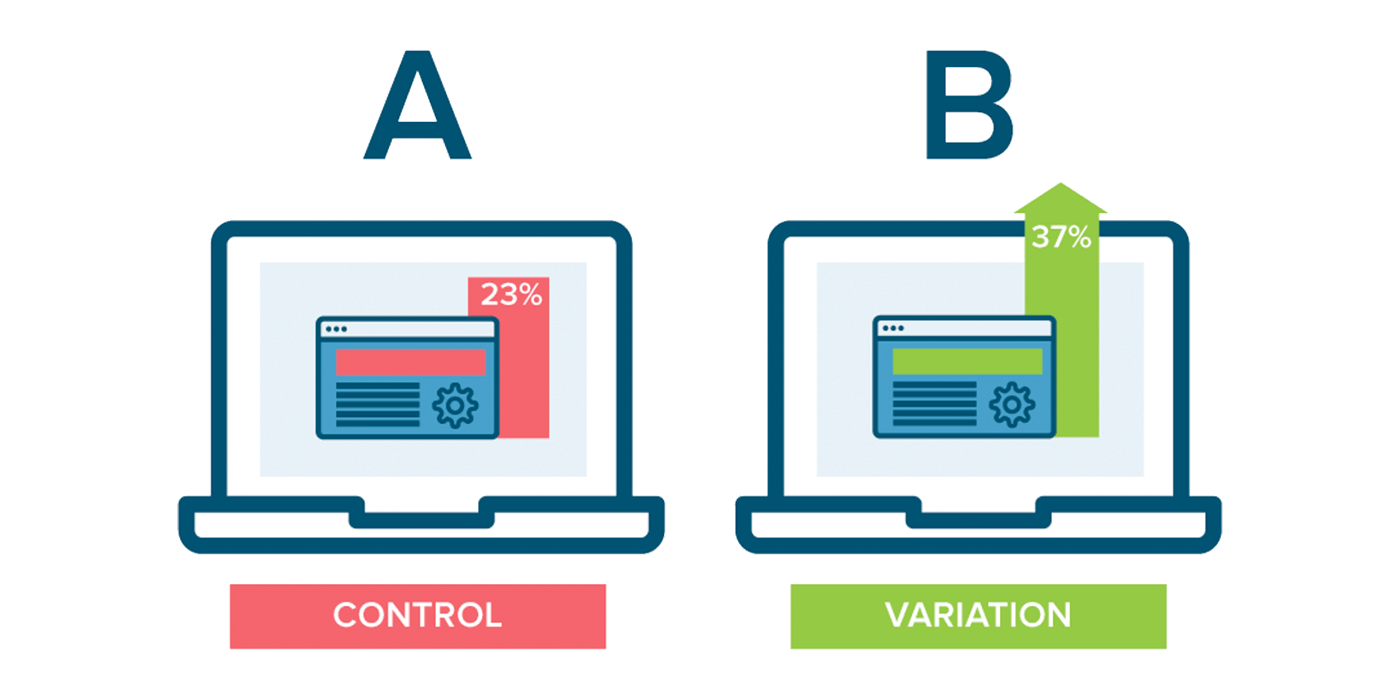

A/B testing, a cornerstone of data-driven decision making, plays a crucial role in optimizing machine learning models. It involves comparing two versions of a model, often referred to as “A” and “B,” to determine which performs better based on a specific metric.

This methodical approach allows for the evaluation and refinement of machine learning models, leading to improved accuracy, efficiency, and ultimately, better outcomes.

Purpose and Benefits of A/B Testing

A/B testing in machine learning serves a multifaceted purpose, primarily aimed at enhancing model performance and identifying the most effective configurations. Here’s a breakdown of its key benefits:

- Model Optimization: A/B testing enables the comparison of different model architectures, hyperparameters, and training data variations to identify the configuration that yields the best results for a given task.

- Performance Evaluation: By comparing the performance of different model versions, A/B testing provides objective insights into their strengths and weaknesses, allowing for informed decision-making regarding model selection and deployment.

- Data-Driven Insights: A/B testing provides empirical evidence for model improvements, supporting data-driven decisions and minimizing reliance on subjective assessments or intuition.

- Reduced Risk: By testing new model configurations in a controlled environment, A/B testing helps mitigate the risks associated with deploying untested models, ensuring a smoother transition and minimizing potential negative impacts.

- Continuous Improvement: A/B testing fosters a culture of continuous improvement, encouraging ongoing model refinement and optimization based on real-world data and performance metrics.

Real-World Examples of A/B Testing in Machine Learning

A/B testing finds practical applications across various machine learning domains. Here are some illustrative examples:

- Recommendation Systems: Platforms like Amazon and Netflix use A/B testing to optimize their recommendation engines. They might test different algorithms or recommendation criteria to determine which leads to higher engagement and conversion rates.

- Image Classification: In medical imaging, A/B testing can be used to evaluate different deep learning models for disease detection. Researchers might compare the performance of different models on a dataset of medical images to identify the most accurate and reliable model for diagnosing specific conditions.

- Natural Language Processing (NLP): A/B testing is employed to refine NLP models for tasks like sentiment analysis, machine translation, and chatbot development. For instance, developers might test different word embedding techniques or language models to determine which yields the most accurate sentiment classification results.

2. Key Concepts and Terminology

Let’s dive into some essential terms that are crucial for understanding A/B testing in the context of machine learning. These terms provide a common language for discussing and analyzing experiments, helping you make informed decisions about your models.

Key Terminology in A/B Testing

Here’s a breakdown of key terms used in A/B testing:

- Control Group: The control group represents the baseline or standard version of your machine learning model or system. It doesn’t receive any changes or modifications. Think of it as the reference point for comparison.

- Treatment Group: The treatment group is the experimental group that receives the new or modified version of your machine learning model or system. This is the version you want to test and compare against the control group.

- Conversion Rate: The conversion rate measures the percentage of users or instances that perform a desired action, such as clicking a button, making a purchase, or completing a task. This is a crucial metric for evaluating the effectiveness of your machine learning model.

- Statistical Significance: Statistical significance indicates that the observed difference between the control and treatment groups is unlikely to have arisen by chance alone. It helps you judge whether an observed improvement reflects a real effect of the modification rather than random variation.

Comparing A/B Testing and Multi-Armed Bandits

A/B testing and multi-armed bandits are both powerful tools for optimizing machine learning models, but they differ in their approach and objectives. Here’s a table comparing these two methods:

| Feature | A/B Testing | Multi-armed Bandits |
|---|---|---|
| Objective | To compare the performance of two or more versions of a model or system to identify the best-performing option. | To find the best-performing option from a set of choices (like different model configurations) by dynamically allocating resources to the most promising options. |
| Experiment Design | Typically involves a fixed allocation of users or instances to control and treatment groups. | Uses an adaptive allocation strategy, continuously updating the allocation based on real-time performance feedback. |
| Data Analysis | Focuses on comparing the performance metrics of the control and treatment groups using statistical tests to determine significance. | Uses bandit algorithms (such as epsilon-greedy, UCB, or Thompson sampling) to learn from past observations and shift allocation toward better-performing options. |
| Typical Use Cases | Optimizing website design, user interfaces, email campaigns, and other marketing efforts. | Personalization, recommendation systems, online advertising, and dynamic pricing. |
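To make the "adaptive allocation" column more concrete, here is a minimal sketch of one simple bandit strategy, epsilon-greedy. The conversion rates, the number of rounds, and the function name `epsilon_greedy` are all assumptions chosen for illustration; real systems often use more refined strategies.

```python
import random

def epsilon_greedy(true_rates, n_rounds=5000, epsilon=0.1, seed=0):
    """Simulate epsilon-greedy traffic allocation over arms with yes/no rewards.

    true_rates are hypothetical conversion rates, unknown to the algorithm.
    """
    rng = random.Random(seed)
    pulls = [0] * len(true_rates)  # how often each arm was shown
    wins = [0] * len(true_rates)   # how often each arm converted
    for _ in range(n_rounds):
        if rng.random() < epsilon or 0 in pulls:
            # Explore: pick a random arm (also used until every arm has data).
            arm = rng.randrange(len(true_rates))
        else:
            # Exploit: pick the arm with the best observed conversion rate.
            arm = max(range(len(true_rates)), key=lambda i: wins[i] / pulls[i])
        pulls[arm] += 1
        wins[arm] += rng.random() < true_rates[arm]
    return pulls, wins

pulls, wins = epsilon_greedy([0.05, 0.08])
print("traffic per arm:", pulls)
print("observed rates:", [w / p for w, p in zip(wins, pulls)])
```

Unlike a fixed 50/50 split, the share of traffic each arm receives here depends on what has been observed so far, which is the key design difference from a classic A/B test.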

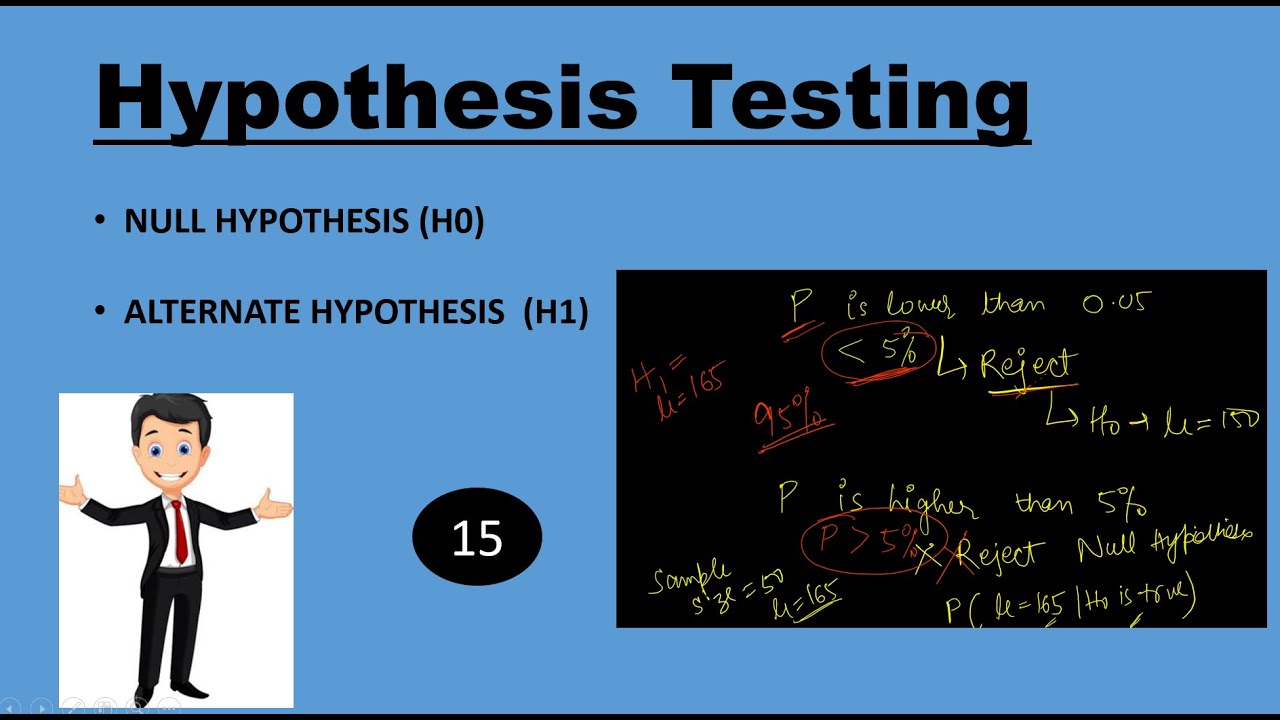

Hypothesis Testing in A/B Testing

Hypothesis testing plays a crucial role in A/B testing. It provides a structured framework for determining whether the observed differences between the control and treatment groups are statistically significant or simply due to random variation.

- Formulating a Hypothesis: The first step is to formulate a clear hypothesis about the expected impact of the modification. For example, “The new model version will lead to a higher conversion rate compared to the control version.” This hypothesis is a statement that you want to test.

- Testing the Hypothesis: The next step involves conducting the A/B test and collecting data from both the control and treatment groups. You then analyze the collected data using statistical tests to determine whether the observed differences support or refute your hypothesis.

- Choosing the Right Statistical Test: The choice of statistical test depends on the type of data you are analyzing, the size of your sample, and the specific hypothesis you are testing. Common statistical tests used in A/B testing include t-tests, chi-squared tests, and ANOVA.
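As one possible illustration of these steps, the sketch below runs a two-sided two-proportion z-test on conversion counts, which for a 2x2 comparison is closely related to the chi-squared test mentioned above. The counts and the function name `two_proportion_z_test` are made up for the example, and the normal approximation assumes reasonably large samples.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical data: 200/5000 conversions in control, 250/5000 in treatment.
z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

With these illustrative numbers the p-value comes out at about 0.016, so at a 5% significance level you would reject the hypothesis that the two versions convert equally.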

3. Steps Involved in A/B Testing

A/B testing is a powerful technique for optimizing machine learning models and website elements. It involves comparing two versions of a system or element to determine which performs better. To ensure the effectiveness and reliability of A/B tests, a structured approach is essential.

This section outlines the key steps involved in conducting A/B tests, from defining the hypothesis to analyzing the results and making informed decisions.

Define the Hypothesis

A clear hypothesis is the foundation of any A/B test. It outlines the expected outcome of the test and the relationship between the control and variant. The hypothesis should be specific, measurable, and achievable.

“Hypothesis: Implementing a new call-to-action button with a more prominent color will increase the click-through rate on our product page by 15% compared to the existing button.”

This hypothesis clearly states the expected outcome (increased click-through rate), the specific change being tested (new button color), and the expected magnitude of the change (15%).

Select the Metric

Choosing the right metric is crucial for measuring the success of an A/B test. The metric should be directly relevant to the hypothesis and the business objectives.

“The primary metric for this A/B test will be the click-through rate (CTR) of the call-to-action button. This metric directly reflects the effectiveness of the button in driving user engagement and is aligned with the goal of increasing conversions.”

While the primary metric is essential, considering secondary metrics can provide additional insights into user behavior. For instance, you might track the time spent on the page, the bounce rate, or the conversion rate to gain a more comprehensive understanding of the test’s impact.

Design the Experiment

Designing the A/B test involves defining the control group, variant group, sample size, and duration. These elements are crucial for ensuring the validity and reliability of the results.

| Element | Control Group | Variant Group | Rationale |
|---|---|---|---|
| Call-to-action button | [Existing button design] | [New button design with prominent color] | To test the impact of the color change on user interaction. |
| Sample size | [Number of users in control group] | [Number of users in variant group] | To ensure statistically significant results. |
| Duration | [Number of days/weeks] | [Number of days/weeks] | To allow sufficient time for user behavior to stabilize and data to be collected. |

The control group represents the baseline, while the variant group receives the change being tested. The sample size should be large enough to detect statistically significant differences between the groups. The duration of the test should be sufficient to allow for user behavior to stabilize and enough data to be collected for analysis.
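To get a feel for what “large enough” means, here is a rough sketch of a standard sample size approximation for comparing two proportions. The 10% baseline click-through rate is an assumption made for the example; the 15% relative lift comes from the hypothesis above, and `sample_size_per_group` is a hypothetical helper name.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_group(p_baseline, p_expected, alpha=0.05, power=0.80):
    """Approximate users needed per group for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p_baseline + p_expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline)
                        + p_expected * (1 - p_expected))
    ) ** 2
    return ceil(numerator / (p_expected - p_baseline) ** 2)

# Assumed baseline CTR of 10%, hoped-for 15% relative lift (10% -> 11.5%).
n = sample_size_per_group(0.10, 0.115)
print(f"about {n} users per group")
```

Under these assumptions the answer is several thousand users per group, and the requirement grows quickly as the effect you want to detect gets smaller.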

Analyze the Results

Analyzing the A/B test results involves collecting data, calculating metrics, performing statistical tests, interpreting the data, and drawing conclusions.

Flowchart:

```mermaid
graph LR
A[Collect Data] --> B[Calculate Metrics]
B --> C[Perform Statistical Tests]
C --> D[Interpret Results]
D --> E[Draw Conclusions]
E --> F[Make Decisions]
```

Statistical tests are used to determine whether the observed differences between the control and variant groups are statistically significant. This helps to rule out random fluctuations and ensure that the results are reliable. The interpretation of the data should be objective and based on the statistical analysis.

Finally, conclusions are drawn based on the findings, leading to informed decisions about whether to implement the variant or stick with the control.
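One way to turn the analysis into a decision is to look at a confidence interval for the difference in conversion rates rather than a p-value alone. The sketch below uses a simple normal-approximation (Wald) interval; the counts, the helper name `diff_confidence_interval`, and the three-way decision rule are illustrative assumptions, not a prescribed procedure.

```python
from math import sqrt
from statistics import NormalDist

def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Normal-approximation interval for (variant rate - control rate)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se

# Hypothetical data: control 200/5000, variant 250/5000.
low, high = diff_confidence_interval(200, 5000, 250, 5000)

if low > 0:
    decision = "ship variant"
elif high < 0:
    decision = "keep control"
else:
    decision = "inconclusive"

print(f"95% CI for the lift: [{low:.4f}, {high:.4f}] -> {decision}")
```

The interval also shows how large the improvement plausibly is, which helps when a statistically significant change is too small to matter for the business.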

4. Metrics for Measuring Performance in A/B Testing

Choosing the right metrics to measure the performance of your machine learning models is crucial for making informed decisions about your A/B tests. By carefully selecting and interpreting these metrics, you can effectively evaluate the impact of different model variations and identify the most effective solution for your specific goals.

Identifying Key Metrics

To effectively evaluate the performance of machine learning models in A/B testing, it is essential to understand and utilize appropriate metrics. Here are five commonly used metrics:

- Accuracy: This metric represents the proportion of correctly classified instances. It is a fundamental measure of model performance, indicating how often the model’s predictions match the true labels.

- Precision: Precision measures the proportion of correctly predicted positive instances out of all instances predicted as positive. It helps understand the model’s ability to avoid false positives, which is crucial in scenarios where misclassifying a negative instance as positive has significant consequences.

- Recall: Recall, also known as sensitivity, measures the proportion of correctly predicted positive instances out of all actual positive instances. It assesses the model’s ability to identify all positive instances, which is critical in scenarios where missing a positive instance is costly.

- F1-Score: The F1-score is the harmonic mean of precision and recall. It provides a balanced measure of model performance, considering both precision and recall. A high F1-score indicates a good balance between minimizing false positives and false negatives.

- AUC (Area Under the Curve): The AUC is a metric used to evaluate the performance of binary classification models. It represents the area under the receiver operating characteristic (ROC) curve, which plots the true positive rate against the false positive rate at different classification thresholds. A higher AUC indicates a better model, as it is able to discriminate between positive and negative instances more effectively.
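The definitions above can be sketched in a few lines of plain Python. The labels and scores are invented toy data, the 0.5 threshold is an assumption, and the helper names are hypothetical; in practice you would more likely use a library such as scikit-learn. The AUC here is computed as the fraction of positive/negative pairs that the model ranks correctly, which is equivalent to the area under the ROC curve.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def roc_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    # A tie between a positive and a negative score counts as half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy data: true labels and model scores for eight instances.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.4, 0.65, 0.3, 0.2, 0.55, 0.8, 0.1]
y_pred = [int(s >= 0.5) for s in scores]  # assumed decision threshold of 0.5

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(metrics, auc)
```

Running the same functions on the predictions of model A and model B gives you directly comparable numbers for each variant.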

Analyzing Metric Advantages and Disadvantages

Each metric has its advantages and disadvantages, depending on the specific context of the A/B test.

- Accuracy:

  - Advantages: It is a simple and widely understood metric, providing a general overview of model performance.

  - Disadvantages: It can be misleading in imbalanced datasets, where one class dominates the other. In such cases, a model that simply predicts the majority class can achieve high accuracy, even if it performs poorly on the minority class.

- Precision:

  - Advantages: It is useful in scenarios where minimizing false positives is crucial, such as spam detection or fraud detection.

  - Disadvantages: It ignores false negatives, so a high precision score can be achieved by predicting only a few, very confident positive instances while missing most of the real ones.

- Recall:

  - Advantages: It is important in scenarios where identifying all positive instances is crucial, such as medical diagnosis or customer churn prediction.

  - Disadvantages: It ignores false positives, so a high recall score can be achieved by simply predicting most instances as positive.

- F1-Score:

  - Advantages: It provides a balanced measure of model performance, considering both precision and recall.

  - Disadvantages: It is less informative than precision and recall individually, as it combines both metrics into a single score.

- AUC:

  - Advantages: It is independent of any single classification threshold and less sensitive to class imbalance than accuracy. It provides a comprehensive measure of model performance across different classification thresholds.

  - Disadvantages: It can be difficult to interpret and compare across different datasets, and it can still look optimistic when the positive class is very rare.
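The accuracy pitfall is easy to reproduce. The sketch below uses a made-up dataset of 1,000 transactions with 2% fraud and a “model” that always predicts the majority class; the numbers are purely illustrative.

```python
# 1,000 hypothetical transactions, 2% of which are fraud (label 1).
y_true = [1] * 20 + [0] * 980

# A "model" that always predicts the majority class (not fraud).
y_majority = [0] * 1000

accuracy = sum(t == p for t, p in zip(y_true, y_majority)) / len(y_true)
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_majority))
recall = true_positives / sum(y_true)

print(f"accuracy = {accuracy:.2f}, recall = {recall:.2f}")
```

The accuracy is 0.98 even though the model never catches a single fraud case (recall 0.00), which is why accuracy alone is a poor primary metric for an A/B test on imbalanced data.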

Choosing the Right Metrics

The choice of metrics depends on the specific goals of the A/B test. Here is a table outlining different A/B test goals and the corresponding recommended metrics:

| A/B Test Goal | Recommended Metrics |
|---|---|
| Increase Click-Through Rate (CTR) | CTR, Conversion Rate, Click-to-Impression Ratio |
| Improve User Engagement | Session Duration, Page Views, Bounce Rate |
| Optimize Revenue | Revenue Per User, Average Order Value, Customer Lifetime Value |
| Reduce Customer Churn | Churn Rate, Customer Retention Rate, Time to Churn |
| Improve Lead Generation | Lead Conversion Rate, Lead Quality Score, Cost Per Lead |

Practical Application

Imagine you are running an A/B test for a recommendation system on an e-commerce platform. Your goal is to increase the number of product purchases made by users.

- Key Metrics: In this scenario, you would focus on metrics related to conversion and revenue, such as:

  - Conversion Rate: The percentage of users who make a purchase after viewing recommendations.

  - Average Order Value: The average amount spent by users who make a purchase.

  - Revenue Per User: The average revenue generated from each user.

- Interpreting Results: After running the A/B test, you analyze the data and find that the new recommendation system results in a higher conversion rate and average order value compared to the existing system. This indicates that the new system is more effective at driving purchases and generating revenue.
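As a small illustration, the three metrics could be computed per variant from a purchase log like the one below. The log format (one row per user, with an order value or `None`), the user IDs, and the amounts are all hypothetical.

```python
from collections import defaultdict

# Hypothetical log: (variant, user_id, order_value or None if no purchase).
events = [
    ("control", "u1", None), ("control", "u2", 40.0),
    ("control", "u3", None), ("control", "u4", 20.0),
    ("treatment", "u5", 50.0), ("treatment", "u6", None),
    ("treatment", "u7", 30.0), ("treatment", "u8", 70.0),
]

def summarize(events):
    """Compute conversion rate, average order value, and revenue per user."""
    users = defaultdict(int)
    orders = defaultdict(int)
    revenue = defaultdict(float)
    for variant, _user_id, order_value in events:
        users[variant] += 1  # assumes exactly one row per user
        if order_value is not None:
            orders[variant] += 1
            revenue[variant] += order_value
    return {
        v: {
            "conversion_rate": orders[v] / users[v],
            "average_order_value": revenue[v] / orders[v] if orders[v] else 0.0,
            "revenue_per_user": revenue[v] / users[v],
        }
        for v in users
    }

summary = summarize(events)
for variant, stats in summary.items():
    print(variant, stats)
```

With only a handful of rows the differences mean nothing statistically; the point is just how the metrics are derived before any significance test is applied.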

5. Types of A/B Testing in Machine Learning

A/B testing in machine learning isn’t just about comparing two models; it’s about strategically targeting different aspects of your machine learning process to find the most effective solutions. This allows you to fine-tune your model, optimize its performance, and ultimately make better predictions.

Let’s delve into the various types of A/B testing, categorized based on the specific aspect of the machine learning model being tested.

Model Architecture Testing

- Description: This type of A/B testing involves comparing the performance of different model architectures. For example, you might test a linear regression model against a decision tree model to see which one performs better on your specific dataset.

- Example: Let’s say you’re building a model to predict customer churn. You could test a simple logistic regression model against a more complex neural network model to see which one provides better accuracy in predicting customer churn. You could use libraries like TensorFlow or PyTorch to implement these models and compare their performance using metrics like accuracy, precision, and recall.

- Benefits: Testing different model architectures can lead to significant improvements in model performance. It allows you to select the model that best fits the complexity of your data and the specific prediction task.
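When two architectures are evaluated on the same held-out examples, a paired bootstrap is one simple way to check whether the accuracy gap is more than noise. The sketch below assumes you already have a per-example list of correct/incorrect results for each model; the lists here are fabricated for illustration, and `bootstrap_accuracy_diff` is a hypothetical helper name.

```python
import random

def bootstrap_accuracy_diff(correct_a, correct_b, n_resamples=2000, seed=0):
    """95% percentile bootstrap interval for accuracy(B) - accuracy(A).

    correct_a / correct_b hold 1 (correct) or 0 (wrong) for the same test rows.
    """
    rng = random.Random(seed)
    n = len(correct_a)
    diffs = []
    for _ in range(n_resamples):
        # Resample test rows with replacement, keeping the pairing intact.
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(sum(correct_b[i] - correct_a[i] for i in idx) / n)
    diffs.sort()
    return diffs[int(0.025 * n_resamples)], diffs[int(0.975 * n_resamples) - 1]

# Fabricated results on 200 shared test rows:
# model A (e.g. logistic regression) gets 150 right, model B gets 170 right.
correct_a = [1] * 150 + [0] * 50
correct_b = [1] * 140 + [0] * 10 + [1] * 30 + [0] * 20

low, high = bootstrap_accuracy_diff(correct_a, correct_b)
print(f"95% interval for the accuracy gain of B over A: [{low:.3f}, {high:.3f}]")
```

If the whole interval sits above zero, the more complex model is plausibly better on this data; if it straddles zero, the simpler model may be the safer choice.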

Hyperparameter Tuning

- Description: Hyperparameters are parameters that are set before the training process begins. This type of A/B testing involves comparing the performance of a model trained with different hyperparameter values. For instance, you might test different learning rates or batch sizes for a neural network.

- Example: You’re training a deep learning model for image classification. You could test different values for the learning rate (e.g., 0.01, 0.001, 0.0001) to see how it affects the model’s convergence and performance. You can use libraries like scikit-learn or Optuna to automate the process of hyperparameter tuning.

- Benefits: Optimizing hyperparameters can significantly impact model accuracy, training speed, and generalization ability. By testing different values, you can find the optimal configuration for your model.

Feature Engineering Testing

- Description: Feature engineering involves transforming raw data into features that are more meaningful and informative for the model. This type of A/B testing compares the performance of a model trained with different feature sets. For example, you might test whether adding new features improves the model’s ability to make accurate predictions.

- Example: You’re building a model to predict house prices. You could test the performance of the model with and without features like the number of bedrooms, bathrooms, and square footage. You could use domain knowledge and data exploration techniques to identify potentially useful features.

- Benefits: Effective feature engineering can significantly improve model performance. By testing different feature combinations, you can identify the most relevant and informative features for your model.

Training Data Testing

- Description: This type of A/B testing involves comparing the performance of a model trained on different datasets. This could involve testing different data sources, data cleaning techniques, or even different subsets of the same dataset.

- Example: You’re building a sentiment analysis model. You could test the performance of the model trained on a dataset of movie reviews versus a dataset of product reviews. You could also test different data cleaning techniques to see how they affect model performance.

- Benefits: Testing different training datasets can help you understand how data quality and characteristics impact model performance. This can help you select the best dataset for training your model and improve its generalization ability.

Evaluation Metric Testing

- Description: This type of A/B testing involves comparing the performance of a model using different evaluation metrics. For example, you might test the model’s accuracy against its precision or recall to see which metric is more relevant for your specific application.

- Example: You’re building a fraud detection model. You might test the model’s performance using different metrics like accuracy, precision, and recall. Depending on the cost of false positives and false negatives, you might prioritize different metrics.

- Benefits: Different evaluation metrics highlight different aspects of model performance. By testing different metrics, you can choose the one that best reflects your specific goals and objectives.

Data Augmentation Testing

- Description: Data augmentation involves creating synthetic data from your existing dataset to increase its size and diversity. This type of A/B testing involves comparing the performance of a model trained on the original dataset versus a model trained on the augmented dataset.

- Example: You’re building an image classification model for recognizing different types of animals. You could augment your dataset by rotating, flipping, or cropping existing images to create new, synthetic images. You could use libraries like imgaug or albumentations to implement data augmentation techniques.

- Benefits: Data augmentation can improve model performance by increasing the diversity and size of the training data. This can help the model generalize better to unseen data and reduce overfitting.

Deployment Strategy Testing

- Description: This type of A/B testing involves comparing the performance of a model deployed using different strategies. For example, you might test different deployment platforms, resource allocation methods, or model update frequencies.

- Example: You’ve trained a machine learning model for real-time fraud detection. You could test different deployment strategies like deploying the model on a cloud platform or on-premise servers. You could also test different model update frequencies to see how they affect the model’s responsiveness and accuracy.

- Benefits: Testing different deployment strategies can help you optimize the model’s performance in a real-world setting. This can ensure that the model is deployed efficiently and effectively.

Table of A/B Testing Types

| Type | Description | Example | Benefits |
|---|---|---|---|
| Model Architecture Testing | Comparing the performance of different model architectures. | Testing a logistic regression model against a neural network model for customer churn prediction. | Improved model performance by selecting the architecture that best fits the data and task. |
| Hyperparameter Tuning | Testing different values for hyperparameters like learning rate and batch size. | Testing different learning rates for a deep learning model for image classification. | Optimized model accuracy, training speed, and generalization ability. |
| Feature Engineering Testing | Comparing the performance of a model trained with different feature sets. | Testing the performance of a house price prediction model with and without features like number of bedrooms and bathrooms. | Improved model performance by identifying the most relevant and informative features. |
| Training Data Testing | Comparing the performance of a model trained on different datasets. | Testing the performance of a sentiment analysis model trained on movie reviews versus product reviews. | Understanding how data quality and characteristics impact model performance. |
| Evaluation Metric Testing | Comparing the performance of a model using different evaluation metrics. | Testing a fraud detection model’s performance using accuracy, precision, and recall. | Choosing the evaluation metric that best reflects the specific goals and objectives. |
| Data Augmentation Testing | Comparing the performance of a model trained on the original dataset versus a model trained on the augmented dataset. | Augmenting an image classification dataset by rotating and flipping images to improve model performance. | Improved model performance by increasing the diversity and size of the training data. |
| Deployment Strategy Testing | Comparing the performance of a model deployed using different strategies. | Testing different deployment platforms and model update frequencies for a real-time fraud detection model. | Optimized model performance in a real-world setting by ensuring efficient and effective deployment. |

Tools and Techniques for A/B Testing

Now that we’ve explored the foundational concepts and steps involved in A/B testing, let’s delve into the practical tools and techniques that empower us to conduct these experiments effectively. The right tools can streamline the process, automate tasks, and provide valuable insights to optimize our machine learning models.

Popular A/B Testing Tools

A plethora of tools are available, each with unique features and functionalities tailored to different needs and use cases. Here’s a glimpse into some prominent players in the A/B testing landscape:

- Google Optimize: Formerly a popular platform integrated with Google Analytics for web-based A/B testing, it offered a visual editor for creating variations and analytics dashboards. Google discontinued it in September 2023, so it is no longer an option for new experiments.

- Optimizely: Known for its robust feature set, Optimizely is a versatile platform suitable for various A/B testing scenarios. It provides advanced targeting options, real-time results monitoring, and in-depth reporting capabilities.

- VWO: VWO stands out with its focus on personalization and advanced targeting. It allows you to create highly specific variations based on user attributes, behavior, and other factors, making it ideal for personalized experiences.

- AB Tasty: AB Tasty offers a comprehensive suite of tools for A/B testing, including a visual editor, advanced analytics, and a user-friendly interface. Its strong focus on user experience makes it a popular choice for teams seeking a seamless workflow.

- Convert: Convert excels in providing a balance of power and ease of use. It offers a drag-and-drop editor, real-time reporting, and a flexible framework for conducting A/B tests across various channels.

Choosing the Right Tool

The best A/B testing tool depends on your specific needs, budget, and the complexity of your machine learning application. Consider the following factors:

- Ease of Use: Look for a tool with a user-friendly interface that aligns with your team’s technical expertise. Some platforms offer visual editors, while others require more technical knowledge.

- Feature Set: Evaluate the tool’s capabilities, such as targeting options, variation creation, analytics, reporting, and integration with other platforms.

- Scalability: Ensure the tool can handle the volume of data and traffic generated by your machine learning application. Some platforms offer scalable solutions for handling large-scale experiments.

- Pricing: Consider the cost of the tool, including subscription fees, usage-based charges, and any additional features.

Techniques for Effective A/B Testing

Beyond choosing the right tools, several techniques can enhance the effectiveness of your A/B tests:

- Statistical Significance: Check whether the observed differences between variations are statistically significant, meaning they are unlikely to be due to random chance. Use appropriate statistical methods, such as t-tests or chi-square tests, to determine significance.

- Sample Size: A sufficient sample size is crucial for reliable results. Determine the required sample size based on the expected effect size and the desired level of statistical power.

- Variation Design: Create meaningful variations that address specific hypotheses and test different aspects of your machine learning model. Avoid introducing too many changes at once, as it can be challenging to isolate the impact of each variation.

- Iterative Approach: Adopt an iterative approach to A/B testing, continuously refining your model based on the results of each experiment. This allows for continuous improvement and optimization.

Best Practices for A/B Testing

A/B testing is a powerful tool for optimizing machine learning models, but getting the most out of it requires careful planning and execution. This section will delve into best practices for designing, conducting, and analyzing A/B tests to ensure reliable and actionable results.

Experiment Design

The success of your A/B test hinges on a well-designed experiment. The following guidelines help ensure your experiment is robust and produces meaningful insights:

- Define a Clear Objective: Before you start, clearly define what you want to achieve with the A/B test. This could be improving model accuracy, reducing latency, or increasing user engagement. A well-defined objective will guide your experiment design and help you interpret the results.

- Choose the Right Metrics: Select metrics that directly measure your objective. For example, if you want to improve model accuracy, use metrics like precision, recall, or F1-score. Avoid using too many metrics, as it can make it difficult to draw clear conclusions.

- Control for Variables: Isolate the variable you’re testing by controlling for other factors that could influence the outcome. For example, if you’re testing a new feature, ensure the user experience is consistent across both the control and treatment groups. This minimizes the risk of confounding variables skewing your results.

- Determine Sample Size: The number of users or data points included in your experiment directly affects the reliability of your results. A larger sample size increases the power of your test, reducing the risk of false negatives (missing a real effect), while the false-positive rate is set by your chosen significance level. Use statistical power analysis to determine the appropriate sample size for your experiment.

- Randomize Treatment Assignment: Randomly assign users or data points to the control and treatment groups to ensure a fair comparison. This guards against selection bias and helps you isolate the impact of the change you’re testing.
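A common way to implement random assignment in practice is deterministic hashing, so that a returning user always lands in the same group without storing any state. The sketch below is one possible approach; the experiment name, the user ID format, and the function name `assign_variant` are assumptions for the example.

```python
import hashlib

def assign_variant(user_id, experiment, treatment_share=0.5):
    """Deterministically map a user to 'control' or 'treatment'.

    Hashing the experiment name together with the user ID keeps assignments
    stable across visits and independent between different experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # roughly uniform in [0, 1)
    return "treatment" if bucket < treatment_share else "control"

# Hypothetical usage with made-up user IDs and experiment name.
assignments = [assign_variant(f"user-{i}", "new-ranker-v2") for i in range(10000)]
share = assignments.count("treatment") / len(assignments)
print(f"treatment share: {share:.3f}")
```

Because the split is driven by a hash rather than by time of day, device, or signup order, the two groups should be comparable apart from the change being tested.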

Data Collection and Analysis

Data collection and analysis are crucial for drawing meaningful conclusions from your A/B test:

- Track Data Accurately: Ensure you collect all the necessary data for your chosen metrics. This might involve logging user interactions, model predictions, or other relevant data points. Accuracy in data collection is paramount for reliable results.

- Use Appropriate Statistical Tests: Select statistical tests that are appropriate for your data and experiment design. This could include t-tests, chi-square tests, or other relevant tests depending on your metrics and sample size. Choose tests that provide accurate p-values and confidence intervals to assess the statistical significance of your results.

- Analyze Data with Caution: Don’t jump to conclusions based on early or incomplete data. It’s important to collect enough data to ensure statistical significance and avoid drawing false conclusions. Consider using statistical tools and techniques to analyze your data and interpret the results correctly.

Common Pitfalls to Avoid

Several common pitfalls can compromise the validity of your A/B tests. Be aware of these and take steps to avoid them:

- Premature Termination: Ending an A/B test prematurely can lead to inaccurate conclusions. Ensure you collect enough data to achieve statistical significance before drawing conclusions.

- Ignoring Baseline Performance: Always compare your results to the baseline performance of your current model or system. This helps you understand whether the change you’re testing is actually an improvement or not.

- Overfitting to the Data: Ensure your model is not overfitting to the data used in your A/B test. This can happen if you’re not using enough data or if your model is too complex. Overfitting can lead to inaccurate results and poor generalization to new data.

- Failing to Account for Seasonality or Other External Factors: Be aware of seasonal variations or other external factors that might influence your results. For example, user behavior during holidays might be different from normal days. Account for these factors to avoid drawing misleading conclusions.
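The premature-termination pitfall can be illustrated with a small simulation. The sketch below runs A/A tests, where both groups share the same true conversion rate, so every "significant" result is a false positive. It compares checking the p-value after every batch against checking once at the end. All parameters (400 tests, 10 looks, 100 users per batch, a 10% rate) are arbitrary assumptions chosen to keep the run short.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a, conv_b, n):
    """Two-sided p-value of a pooled two-proportion z-test with n users per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:  # no variation observed yet, so no evidence of a difference
        return 1.0
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(num_tests=400, looks=10, batch=100, rate=0.10, alpha=0.05, seed=7):
    """Return (false positive rate with peeking, false positive rate at the end)."""
    rng = random.Random(seed)
    crossed_any_look = significant_at_end = 0
    for _ in range(num_tests):
        conv_a = conv_b = 0
        crossed = False
        for look in range(1, looks + 1):
            # Both arms draw from the same true rate: there is no real effect.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            p = p_value(conv_a, conv_b, look * batch)
            if p < alpha:
                crossed = True  # a peeking analyst would have stopped here
        crossed_any_look += crossed
        significant_at_end += p < alpha
    return crossed_any_look / num_tests, significant_at_end / num_tests


peeking_rate, fixed_rate = simulate()
print(f"false positives with peeking: {peeking_rate:.1%}")
print(f"false positives at fixed horizon: {fixed_rate:.1%}")
```

Stopping at the first significant look inflates the false positive rate well beyond the nominal significance level, which is why the sample size should be fixed in advance or a sequential testing method used instead.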

Challenges and Considerations

While A/B testing is a powerful tool for optimizing machine learning models, it comes with challenges that must be addressed to keep experimentation ethical and responsible. This section covers ethical considerations and bias mitigation strategies, and explains how to address data privacy concerns.

Ethical Considerations and Bias Mitigation

Ethical considerations are paramount in A/B testing, particularly when dealing with sensitive data or applications. It is crucial to ensure that experiments are conducted responsibly and do not perpetuate existing biases or harm individuals or groups.

- Fairness and Equity: A/B testing should strive to ensure fairness and equity in treatment across different groups. It is important to consider how different groups might be affected by the variations being tested and to avoid creating or exacerbating existing disparities.

- Transparency and Accountability: Transparency is essential in A/B testing. Clearly documenting the experimental design, metrics used, and results allows for scrutiny and accountability. This helps to build trust and ensure that experiments are conducted ethically.

- Bias Mitigation: Machine learning models can inherit biases from the data they are trained on. It is crucial to identify and mitigate biases during the A/B testing process. This involves techniques like data preprocessing, feature engineering, and model evaluation to ensure that the results are not influenced by unfair or discriminatory factors.
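One simple way to act on the fairness point above is to break the treatment effect down by user segment, so that an overall win does not hide a loss for one group. The sketch below is a minimal example under stated assumptions: the record format, segment names, and counts are all hypothetical, and a real analysis would also test each segment’s difference for statistical significance.

```python
from collections import defaultdict


def lift_by_segment(records):
    """Compute treatment minus control conversion rate for each segment.

    records: iterable of (segment, group, converted) tuples, where group is
    'control' or 'treatment' and converted is a bool.
    """
    # Per segment and group: [conversions, users]
    counts = defaultdict(lambda: {"control": [0, 0], "treatment": [0, 0]})
    for segment, group, converted in records:
        counts[segment][group][0] += int(converted)
        counts[segment][group][1] += 1

    lifts = {}
    for segment, groups in counts.items():
        (c_conv, c_n), (t_conv, t_n) = groups["control"], groups["treatment"]
        if c_n == 0 or t_n == 0:
            continue  # cannot compare a segment that is missing one group
        lifts[segment] = t_conv / t_n - c_conv / c_n
    return lifts


# Made-up data: the new model helps new users but hurts returning users.
records = (
    [("new_users", "control", i < 10) for i in range(100)]
    + [("new_users", "treatment", i < 15) for i in range(100)]
    + [("returning", "control", i < 20) for i in range(100)]
    + [("returning", "treatment", i < 16) for i in range(100)]
)
lifts = lift_by_segment(records)
harmed = [segment for segment, lift in lifts.items() if lift < 0]
print(lifts)
print("segments where treatment underperforms:", harmed)
```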

Data Privacy and Responsible Experimentation

Data privacy is a critical concern in A/B testing, especially when dealing with sensitive information. It is essential to implement measures to protect user data and ensure responsible experimentation.

- Data Anonymization and De-identification: To protect user privacy, data should be anonymized or de-identified before being used in A/B testing. This involves removing personally identifiable information (PII) from the data.

- Data Minimization: Only collect and use the data that is absolutely necessary for the A/B test. This principle helps to minimize the risk of data breaches and protects user privacy.

- Informed Consent: When possible, obtain informed consent from users before including their data in A/B testing. This ensures transparency and allows users to make informed decisions about their data.

- Data Security and Access Control: Implement robust data security measures to protect user data from unauthorized access, use, or disclosure. Control access to data based on need-to-know principles.
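The de-identification and data minimization points above can be sketched as a small logging helper. This is an illustration only: the field names, the `ALLOWED_FIELDS` set, and the key handling are assumptions. Note that a keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields are needed to analyze the experiment.
ALLOWED_FIELDS = {"group", "clicked", "model_version"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash so raw IDs never reach the experiment logs."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_experiment_event(raw_event: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the user ID for a pseudonymous key."""
    event = {k: v for k, v in raw_event.items() if k in ALLOWED_FIELDS}
    event["user_key"] = pseudonymize(raw_event["user_id"], secret_key)
    return event


# Made-up event; in practice the key would come from a secrets manager, not source code.
raw = {
    "user_id": "user-123",
    "email": "a@example.com",
    "group": "treatment",
    "clicked": True,
}
event = to_experiment_event(raw, secret_key=b"demo-only-key")
print(event)
```

Using an allow-list rather than a block-list means that any new field added to the raw event is excluded from the experiment data by default.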

Case Studies and Real-World Applications

A/B testing has been instrumental in driving improvements in various machine learning applications. Let’s explore some real-world examples that showcase the power of A/B testing in optimizing machine learning models and achieving tangible results.

Personalized Recommendations

Personalized recommendations are crucial for many businesses, especially e-commerce platforms.

- Case Study: Netflix. The streaming giant extensively uses A/B testing to optimize its recommendation engine. By testing different algorithms and recommendation strategies, it aims to personalize the content suggested to each user.

- Challenge: To improve user engagement and retention by providing highly relevant recommendations.

- Approach: Netflix implemented A/B testing on its recommendation system, comparing different algorithms and features. They tested various factors, such as the weight assigned to different user preferences, the influence of popularity, and the inclusion of diverse content categories.

- Outcome: Through A/B testing, Netflix achieved a significant increase in user engagement and satisfaction. They observed a notable rise in the number of hours spent watching content, as well as a reduction in churn rate.

- Case Study: Amazon. The e-commerce behemoth leverages A/B testing to refine its product recommendations and personalize the shopping experience for its customers.

- Challenge: To enhance the shopping experience by presenting relevant product recommendations and increasing sales conversions.

- Approach: Amazon conducts A/B tests on its recommendation system, comparing different recommendation algorithms and product display strategies. They experiment with factors like the number of recommendations displayed, the product categories included, and the use of personalized product suggestions.

- Outcome: By implementing A/B testing, Amazon achieved a notable increase in product sales and customer satisfaction. They observed a higher click-through rate on product recommendations and a rise in overall purchase conversions.

Fraud Detection

Fraud detection is a critical aspect of many businesses, especially in financial institutions.

- Case Study: PayPal. The online payment platform utilizes A/B testing to enhance its fraud detection system.

- Challenge: To minimize fraudulent transactions while ensuring a seamless user experience.

- Approach: PayPal implemented A/B testing on its fraud detection model, comparing different algorithms and feature sets. They tested various factors, such as the inclusion of behavioral data, the use of machine learning models, and the thresholds for triggering fraud alerts.

- Outcome: Through A/B testing, PayPal achieved a significant reduction in fraudulent transactions while maintaining a low rate of false positives. They observed a decrease in fraudulent activity and an improvement in the accuracy of their fraud detection system.

- Case Study: Mastercard. The global payment network employs A/B testing to optimize its fraud detection system and ensure secure transactions.

- Challenge: To detect fraudulent transactions in real time while minimizing the impact on legitimate transactions.

- Approach: Mastercard conducts A/B tests on its fraud detection model, comparing different algorithms and feature sets. They experiment with factors like the use of real-time data, the inclusion of behavioral patterns, and the threshold for triggering fraud alerts.

- Outcome: By implementing A/B testing, Mastercard achieved a significant reduction in fraudulent transactions while minimizing false positives. They observed a decrease in fraudulent activity and an improvement in the accuracy of their fraud detection system.

Image Recognition

Image recognition technology is used in various applications, such as self-driving cars, medical imaging, and object detection.

- Case Study: Google Photos. The image storage and sharing service uses A/B testing to optimize its image recognition capabilities.

- Challenge: To improve the accuracy and efficiency of its image recognition algorithms, enabling more accurate image tagging and search functionality.

- Approach: Google Photos implemented A/B testing on its image recognition models, comparing different algorithms and feature sets. They tested various factors, such as the use of deep learning models, the inclusion of contextual information, and the optimization of training data.

- Outcome: Through A/B testing, Google Photos achieved a significant improvement in the accuracy of its image recognition system. They observed a higher accuracy in image tagging and a more efficient search experience for users.

- Case Study: Tesla Autopilot. Tesla’s driver-assistance feature uses A/B testing to improve its object detection and lane keeping capabilities.

- Challenge: To enhance the accuracy and reliability of its object detection and lane keeping algorithms, ensuring a safe and efficient driving experience.

- Approach: Tesla conducts A/B tests on its Autopilot system, comparing different algorithms and feature sets. They experiment with factors like the use of sensor data, the inclusion of environmental information, and the optimization of training data.

- Outcome: By implementing A/B testing, Tesla achieved a significant improvement in the accuracy and reliability of its Autopilot system. They observed a decrease in accidents and an enhancement in the overall driving experience.

Future Trends and Developments in A/B Testing for Machine Learning

The field of A/B testing in machine learning is rapidly evolving, with new technologies and methodologies emerging constantly. These advancements are transforming how we design, execute, and interpret A/B tests, leading to more efficient and effective experiments.

A/B Testing in Specific Machine Learning Domains

A/B testing is being applied to improve the performance of machine learning models in various domains, including Natural Language Processing (NLP), Computer Vision, and Recommender Systems.

- Natural Language Processing (NLP): A/B testing is used to evaluate and optimize language models, chatbots, and sentiment analysis tools. For example, A/B testing can be used to compare different approaches to text preprocessing, feature extraction, or model architecture in natural language understanding tasks.

- Computer Vision: A/B testing applies to image recognition, object detection, and video analysis. For instance, A/B tests can be used to compare different image augmentation techniques, object detection algorithms, or video classification models.

- Recommender Systems: A/B testing is crucial for optimizing the accuracy and relevance of recommendation algorithms. For example, A/B tests can be used to evaluate different recommendation strategies, such as collaborative filtering, content-based filtering, or hybrid approaches.

New A/B Testing Methodologies for Machine Learning

Emerging methodologies are enhancing the efficiency and effectiveness of A/B testing for machine learning models.

- Bandit Algorithms: Bandit algorithms are used to optimize the exploration-exploitation trade-off in A/B testing for machine learning. They allow for dynamic allocation of traffic to different versions of a model, continuously learning and adapting to the best performing version.

- Bayesian Optimization: Bayesian optimization is used to design more efficient and effective A/B tests by leveraging prior knowledge and probabilistic models. It helps to reduce the number of experiments needed to find optimal settings for machine learning models.

- Multi-Armed Bandit Testing: Multi-armed bandit testing is a framework for comparing different machine learning models and hyperparameter settings. It allows for the simultaneous evaluation of multiple options, dynamically allocating resources to the most promising candidates.
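To make the idea of dynamic traffic allocation concrete, here is a minimal sketch of a bandit-style test. The text does not name a specific algorithm; Thompson sampling with Beta priors is used here as one common choice. The true conversion rates are hypothetical and would be unknown in a real experiment, where the reward would come from live user feedback instead of a simulated draw.

```python
import random


def thompson_sampling(true_rates, rounds=5000, seed=42):
    """Simulate Thompson sampling and return how many users each variant received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its Beta posterior
        # (a uniform Beta(1, 1) prior updated with the observed successes and failures).
        samples = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = samples.index(max(samples))  # serve the variant with the highest draw
        # Simulated user response; replace with real feedback in practice.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


# Hypothetical true conversion rates for model A and model B.
pulls = thompson_sampling([0.05, 0.15])
print("traffic per variant:", pulls)
```

Compared with a fixed 50/50 split, this approach sends fewer users to the weaker variant as evidence accumulates, at the cost of making classical significance testing on the results less straightforward.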

Impact of New Technologies and Techniques on A/B Testing

Emerging technologies are transforming A/B testing in machine learning by addressing key challenges and unlocking new possibilities.

- Explainable AI (XAI): XAI techniques can be integrated with A/B testing to provide insights into the reasons behind performance differences between different versions of a machine learning model. This enhances the interpretability and transparency of A/B testing results.

- Federated Learning: Federated learning enables A/B tests on decentralized datasets, allowing for privacy-preserving experimentation on sensitive data. This is particularly relevant in scenarios where data is distributed across multiple devices or organizations.

- Generative Adversarial Networks (GANs): GANs can be used to generate synthetic data for A/B testing, reducing the need for large and expensive real-world datasets. This is particularly useful in scenarios where real-world data is limited or costly to collect.

Q&A: A/B Testing Machine Learning

What are some common pitfalls to avoid in A/B testing?

Some common pitfalls include: small sample sizes, insufficient test duration, failing to account for confounding variables, and misinterpreting statistical significance.

How can I ensure that my A/B tests are ethical?

Ethical considerations are crucial. Ensure your experiments are fair, transparent, and don’t discriminate against any groups. You should also be mindful of data privacy and security.

What are some popular tools for A/B testing in machine learning?

Some popular tools include Optimizely, VWO, and AB Tasty. These platforms offer features for designing, running, and analyzing A/B tests. Google Optimize was also widely used, but Google discontinued it in September 2023.